Coming back to the firmware platform series, I’d like to talk about the kind of infrastructure we should have in place to put a platform to good use. The issues we’ll look at in this post should not be showstoppers in most cases (in fact, I’ve seen larger platforms where only the target that was to be released next was kept in a runnable state). However, addressing the four issues presented in this article will go a long way towards earning a sizeable ROI for the platform.

Continuous Integration

A robust CI system is the cornerstone of all our infrastructure needs (I take an SCM as a given here!). The solution that needs to be designed should be able to:

- Distinguish between different stages at a fine granularity (e.g. for a check-in build the system should not run expensive tests, whereas these might be useful for a nightly build).

- Support different grades of code maturity and run tests accordingly, i.e. we should differentiate between a development environment, a staging/integration environment and a production environment.

- Support different test targets, e.g. we might want to run certain tests on physical hardware, whereas others might work on emulated hardware as well. Our CI system should make it easy to set up these kinds of test plans.
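To make the three requirements above a bit more tangible, here is a minimal sketch of how a test plan could be derived from stage, code maturity and available targets. All names (`Stage`, `Maturity`, `Target`, `PlanEntry`, `build_test_plan`) and the example tests are assumptions made up for illustration; they are not taken from any particular CI product, and the ordering of the maturity levels is just one possible choice.

```python
from dataclasses import dataclass
from enum import IntEnum


class Stage(IntEnum):
    CHECKIN = 1   # fast feedback on every commit
    NIGHTLY = 2   # may include expensive tests


class Maturity(IntEnum):
    DEVELOPMENT = 1
    STAGING = 2
    PRODUCTION = 3


class Target(IntEnum):
    EMULATOR = 1  # cheaper, so preferred whenever a test allows it
    HARDWARE = 2


@dataclass(frozen=True)
class PlanEntry:
    """Hypothetical description of one test and where it may run."""
    name: str
    min_stage: Stage        # cheapest stage in which the test is run
    min_maturity: Maturity  # lowest code maturity the test applies to
    targets: frozenset      # targets the test is able to run on


def build_test_plan(entries, stage, maturity, available_targets):
    """Return (test name, target) pairs for the tests that should run."""
    plan = []
    for entry in entries:
        if stage < entry.min_stage or maturity < entry.min_maturity:
            continue  # too expensive or not relevant for this build
        usable = entry.targets & frozenset(available_targets)
        if usable:
            plan.append((entry.name, min(usable)))  # pick the cheapest target
    return plan


# Example entries, purely illustrative.
ENTRIES = [
    PlanEntry("crc_unit", Stage.CHECKIN, Maturity.DEVELOPMENT,
              frozenset({Target.EMULATOR, Target.HARDWARE})),
    PlanEntry("sensor_soak", Stage.NIGHTLY, Maturity.STAGING,
              frozenset({Target.HARDWARE})),
]
```

With these entries, a check-in build on a development branch would only schedule `crc_unit` on the emulator, while a nightly staging build would additionally schedule `sensor_soak` on real hardware.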

Continuous Testing

Given that we aim for a Gen3 platform, we should basically be able to deploy to production (i.e. produce a releasable binary!) at any given point in time. To enable this, we cannot afford any manual tests at all during acceptance, regression and release tests. In fact, the only times when manual tests are appropriate are during development of a given function (i.e. when the developer tries to figure out how a given feature should work) and during development of the automated tests for the feature (i.e. when the test developer tries to figure out how the feature is implemented). Ideally the latter would never happen, as the test should be developed against a specification. However, in “the real world”(tm) there will usually be implementation details that have not made it into the spec but that influence the tests, so the test developer will have to look at the target to see how it behaves, which will often be by means of a manual test.

Alrighty: Now how does this relate to this post’s topic (Infrastructure)? Given the theory above we need to have the relevant infrastructure in place that actually allows QA to:

- Execute tests in a timely manner (this might need horizontal scaling for on-target tests)

- Develop tests: As this is a development job, QA will need very similar resources/infrastructure to what Dev needs, e.g. access to an SCM and build servers.

- Get support if things go pear-shaped: a shiny new cloud-hosted DevOps toolchain is of little use if it does not satisfy the needs of its users.

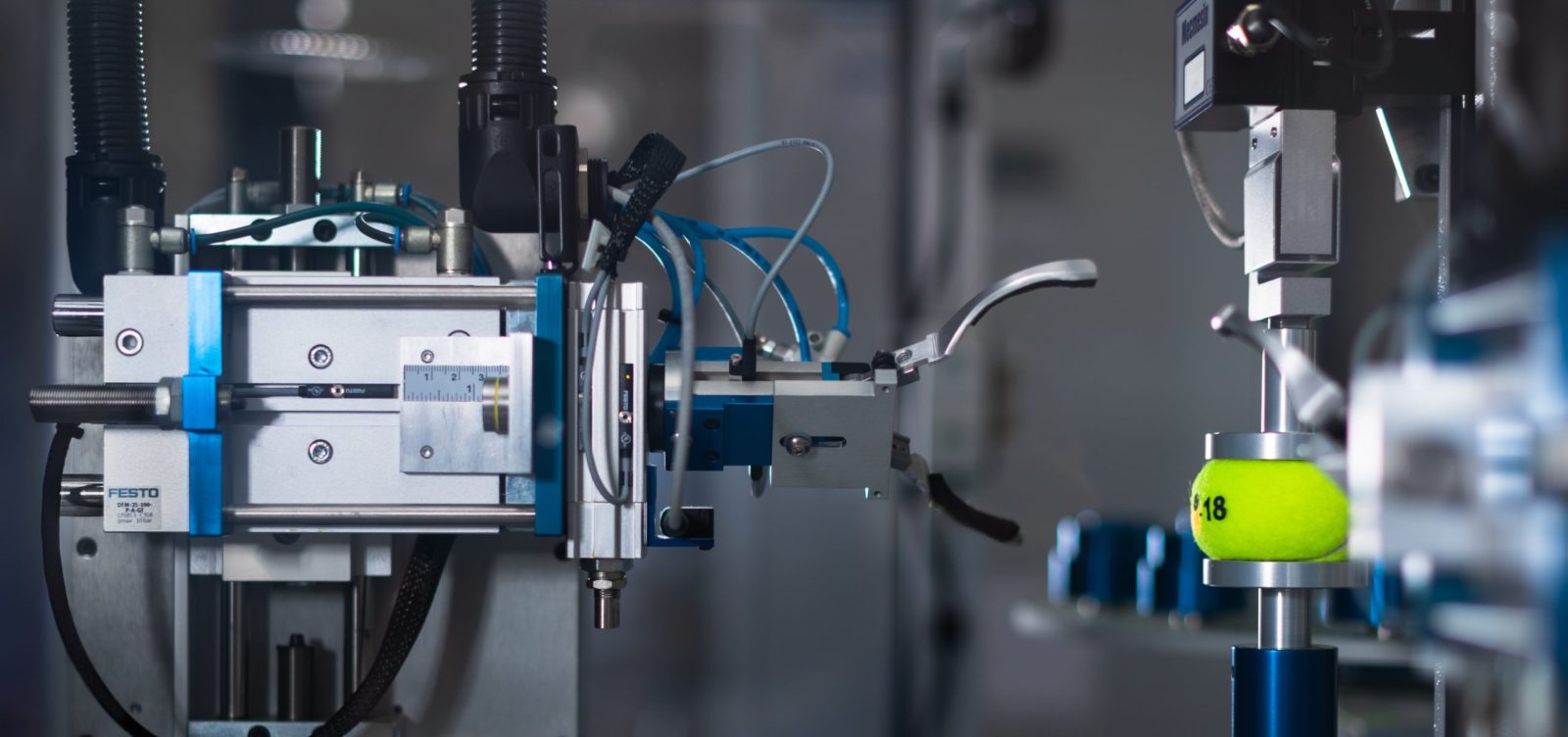

Physical Tests

By “physical tests” I mean tests that run in a production-like environment on the real hardware. These are the last tests a bit of code should be subjected to, as they are the most expensive ones (in terms of time and resources required). Ideally our infrastructure sports a system that allows for physical tests to be executed automatically. Depending on our use case this means:

- Enough physical devices to test all relevant hardware variants.

- Enough physical devices to allow for parallelization of tests (if necessary!)

- Actuators to stimulate the devices. Since we’re talking embedded hardware, we’ll in most cases do some kind of measurement, so we need to be able to stimulate the sensors of the device. Ideally each device in the test setup has its own complete set of actuators, as this eases parallelization: we don’t have to check which tests can run on which device given the actuators connected to it.
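If the devices in the test setup are not equipped identically, the scheduler has to perform exactly the kind of check mentioned in the last point. A minimal sketch of that check could look as follows; the `Device` type, the `find_device` helper and the variant and actuator names are hypothetical and only serve to illustrate the idea.

```python
from dataclasses import dataclass


@dataclass
class Device:
    """Hypothetical entry in the pool of physical test devices."""
    name: str
    variant: str          # hardware variant of the device
    actuators: frozenset  # actuators wired up to this device
    busy: bool = False


def find_device(devices, variant, required_actuators):
    """Return the first free device that can run the test, or None."""
    required = frozenset(required_actuators)
    for device in devices:
        if device.busy or device.variant != variant:
            continue
        if required <= device.actuators:  # all needed actuators are present
            return device
    return None


# Example pool, purely illustrative.
POOL = [
    Device("rig-1", "rev-a", frozenset({"temperature"})),
    Device("rig-2", "rev-a", frozenset({"temperature", "pressure"})),
]
```

In this pool a test that needs a pressure actuator can only run on `rig-2`, so it has to wait whenever `rig-2` is busy, even if `rig-1` is idle. With a complete set of actuators on every device, the subset check always succeeds and any free device of the right variant will do.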

Automated Deployment

This is the bit that ties everything together and the single part that can make or break our CI system. For off-target tests this is usually trivially easy, as these tests can in most cases be run straight on the build server. However, once we get to the unique characteristics of embedded devices, things can get complicated, depending on the update mechanism that is chosen for the device in question. Just to give you an idea of the different deployment strategies and problems I’ve seen during the last ten years:

- A custom programming device is needed to update the firmware; the target will only boot into the application if the programmer is not attached after power-on.

- Wireless update (Zigbee): targets lose the connection to their controller after the update and need to be rejoined using a custom RFID medium.

- Targets need a physical DIP switch to be set before they enter their update loader.

- Targets need a signal to be sent to them during their boot cycle so that they stay in the bootloader and accept a new binary. The window during which the signal has to be sent is < 40 ms.

- Targets lose all configuration after a firmware update; parts of the configuration must be brought to the target in physical form.

- Binaries must be signed with the vendor’s private key, but production firmware uses a different key than development firmware. Keys are injected into the device during production and cannot be changed (read: devices which are intended for development and testing need to be specifically produced!).

- Targets are updateable via Ethernet, but the update can only be initiated via the HTTP/WebUI of the target.

- Brittle update mechanisms lead to targets being bricked during or after the update, requiring custom hardware and/or debug probes to get them to work again.
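One way to keep such device-specific quirks out of the test system itself is to hide them behind a common deployment interface and to verify every update before any test is run. The following is only a sketch under assumptions: the `Deployer` interface, `deploy` and the `FlakyBootloaderDeployer` fake (which pretends to miss a tight bootloader window a few times) are invented for illustration and do not talk to any real hardware.

```python
from abc import ABC, abstractmethod


class DeploymentError(Exception):
    """Raised when a target could not be updated."""


class Deployer(ABC):
    """Hypothetical interface that hides the update mechanism of a target."""

    @abstractmethod
    def enter_update_mode(self) -> None: ...

    @abstractmethod
    def flash(self, image: bytes) -> None: ...

    @abstractmethod
    def running_version(self) -> str: ...


def deploy(deployer, image, expected_version, attempts=3):
    """Flash and verify the image; return the number of attempts needed."""
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            deployer.enter_update_mode()
            deployer.flash(image)
            if deployer.running_version() == expected_version:
                return attempt
            last_error = DeploymentError("version mismatch after update")
        except DeploymentError as error:
            last_error = error  # e.g. the bootloader window was missed
    raise DeploymentError(f"gave up after {attempts} attempts") from last_error


class FlakyBootloaderDeployer(Deployer):
    """Fake target that misses its bootloader window a given number of times."""

    def __init__(self, misses):
        self._misses = misses
        self._version = "1.0.0"

    def enter_update_mode(self):
        if self._misses > 0:
            self._misses -= 1
            raise DeploymentError("missed the bootloader window")

    def flash(self, image):
        # For the fake, the image content simply is the new version string.
        self._version = image.decode()

    def running_version(self):
        return self._version
```

A test run would then call something like `deploy(FlakyBootloaderDeployer(misses=1), b"2.0.0", "2.0.0")` and only start the actual tests once the call returns. Each of the mechanisms listed above would get its own `Deployer` implementation, while the retry and verification logic stays in one place.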

One can imagine the headaches these points can produce if one wants to design a reliable test system. In the end, I’ve seen working test systems with varying degrees of reliability in all of the cases above. However, the number of hoops QA had to jump through (and the amount of money they burned in the process!) is usually significant: in one case the custom-designed test equipment clocked in at about 100k€ apiece, excluding the design costs. This cost might be negligible in the grand scheme of things, especially if we expect to sell hundreds of thousands of devices, but few department heads will happily shell out that kind of money (or even budget it as additional development costs of a new device).

The QA costs that can arise if a device is not designed with testability in mind are an important reason to get QA in the loop as early as possible during the initial development and design phase. They have testability requirements that should, at the very least, be considered during the design of the hardware as well as the design of the software.

Finishing thoughts

The infrastructure part of a modern platform needs very careful design. This is one of the most crucial success drivers of a platform, and all stakeholders should be involved, as they will all have requirements here. Given that the infrastructure needs to be ready as soon as the first lines of code are being written for the product, the infrastructure needs some lead time.

Image credit: Photo by ThisisEngineering RAEng on Unsplash